I have been reading Rendering with Radiance and I am eager to learn how to define my own materials accurately. However, as I continue to read, I have many unanswered questions! I’ve listed the basic ones below, and I hope the community can help clear things up for me.

Question 1: I’d like to confirm the limitations of the macbethcal measurement technique. Is my summary below accurate?

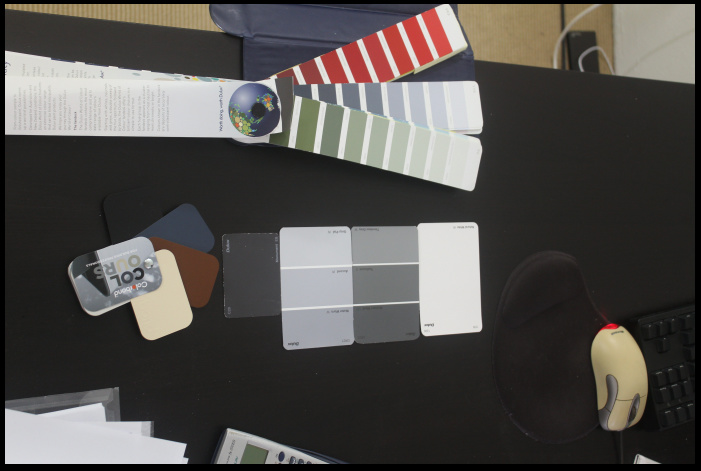

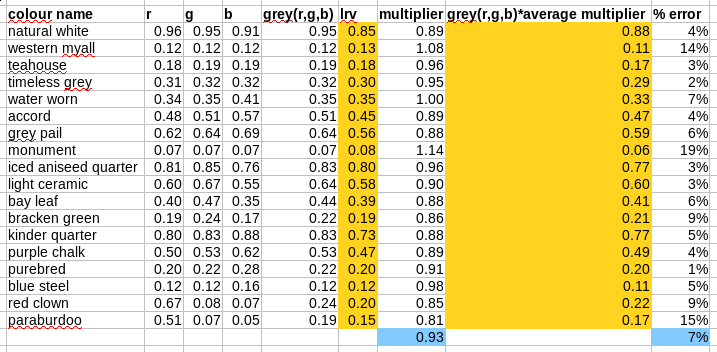

I can photograph a Macbeth chart with the same camera settings and under the same lighting as my object. The macbethcal program detects the chart and generates a calibration function, which I can apply to the photo of my object and then sample the average RGB values from the result. I can then use these RGB numbers to define my material.
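For concreteness, here is a minimal sketch of the "sample the average RGB" step as I picture it. It assumes the samples are already calibrated reflectances read off the corrected image (for example with ximage's `c` command); the sample numbers are made up, and `mean_rgb`/`grey` are my own hypothetical helpers. The grey value uses the luminance weights Radiance uses for its standard RGB primaries (approximately 0.265, 0.670, 0.065).

```python
# Sketch: average hypothetical calibrated RGB samples into one material colour.

def mean_rgb(samples):
    """Average a list of (r, g, b) tuples channel by channel."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    return tuple(sum(s[i] for s in samples) / n for i in range(3))


def grey(rgb):
    """Luminance-weighted grey reflectance (Radiance's RGB weights)."""
    r, g, b = rgb
    return 0.265 * r + 0.670 * g + 0.065 * b


# Hypothetical readings from the calibrated photo.
samples = [(0.42, 0.35, 0.28), (0.40, 0.34, 0.27), (0.44, 0.36, 0.29)]
rgb = mean_rgb(samples)
print("RGB: %.3f %.3f %.3f" % rgb)
print("grey reflectance: %.3f" % grey(rgb))
```

The averaged triple is what I would then type into the material definition.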

This implies that the `c` command in ximage returns the hemispherical reflectance RGB directly from the calibrated image, with no further calculation required. If my object is not a planar surface (e.g. a spherical rock) or is lit non-uniformly, its RGB values will vary across the surface because of self-shading, specular highlights, and the non-uniform lighting itself. This would reduce the accuracy of the method.
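To put a rough number on that variation, I could compute how much the luminance of the samples spreads. This is my own heuristic, not something macbethcal does: it reports the coefficient of variation (standard deviation divided by mean) of the sample luminances. Both sample sets are invented, one for a flat, evenly lit patch and one including a shaded and a highlighted pixel.

```python
import statistics


def luminance(rgb):
    """Luminance-weighted value of an RGB reflectance (Radiance's weights)."""
    r, g, b = rgb
    return 0.265 * r + 0.670 * g + 0.065 * b


def coefficient_of_variation(samples):
    """Population std. dev. of sample luminance divided by its mean."""
    lums = [luminance(s) for s in samples]
    return statistics.pstdev(lums) / statistics.mean(lums)


# Hypothetical samples: flat and evenly lit vs. curved with shadow/highlight.
flat = [(0.42, 0.35, 0.28), (0.40, 0.34, 0.27), (0.44, 0.36, 0.29)]
curved = [(0.42, 0.35, 0.28), (0.20, 0.17, 0.14), (0.80, 0.78, 0.75)]
print("flat CV:   %.3f" % coefficient_of_variation(flat))
print("curved CV: %.3f" % coefficient_of_variation(curved))
```

A large value would be a warning that the averaged RGB is mixing shading and highlights into the "material" colour.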

Can I mitigate this by sampling only the part of the material that lies in the same plane as the chart, where I can see that no self-shading or specular highlights occur?
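A sketch of what I mean: average only a rectangular region of the calibrated image, and additionally drop pixels whose luminance is far from the region's median, treating them as highlights or shadows. The `image` here is a hypothetical nested list of already-calibrated RGB values (reading an actual Radiance picture is left out), and the 25% rejection tolerance is an arbitrary assumption on my part.

```python
import statistics


def luminance(rgb):
    """Luminance-weighted value of an RGB reflectance (Radiance's weights)."""
    r, g, b = rgb
    return 0.265 * r + 0.670 * g + 0.065 * b


def sample_region(image, x0, y0, x1, y1, tolerance=0.25):
    """Average pixels in columns [x0, x1) and rows [y0, y1), skipping outliers.

    Pixels whose luminance differs from the region median by more than
    `tolerance` (a fraction of the median) are ignored as highlights/shadows.
    Returns (mean_rgb, number_of_pixels_kept).
    """
    region = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    med = statistics.median(luminance(p) for p in region)
    kept = [p for p in region if abs(luminance(p) - med) <= tolerance * med]
    if not kept:
        raise ValueError("no pixels left after outlier rejection")
    n = len(kept)
    return tuple(sum(p[i] for p in kept) / n for i in range(3)), n


# Hypothetical 3x3 region: uniform material plus one highlight and one shadow.
base = (0.40, 0.34, 0.27)
image = [
    [base, base, base],
    [base, (0.90, 0.88, 0.85), base],
    [base, base, (0.10, 0.08, 0.06)],
]
rgb, n = sample_region(image, 0, 0, 3, 3)
print("kept %d pixels, RGB: %.3f %.3f %.3f" % (n, *rgb))
```

Choosing the rectangle by eye (in the chart's plane) does most of the work; the outlier rejection only catches the odd highlight or shadow pixel inside it.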

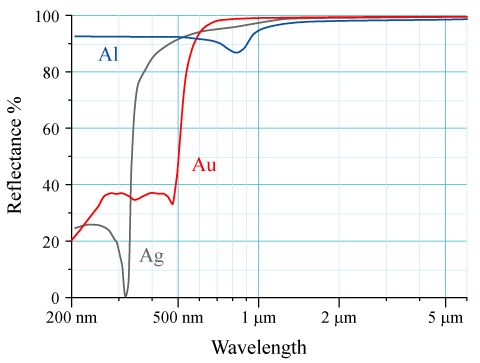

As the material becomes highly specular, e.g. stainless steel, I suspect the Macbeth method becomes useless, since the measured colors would be those of the reflected objects rather than of the material itself. For such materials, I assume I can just use existing material libraries and known metal values.

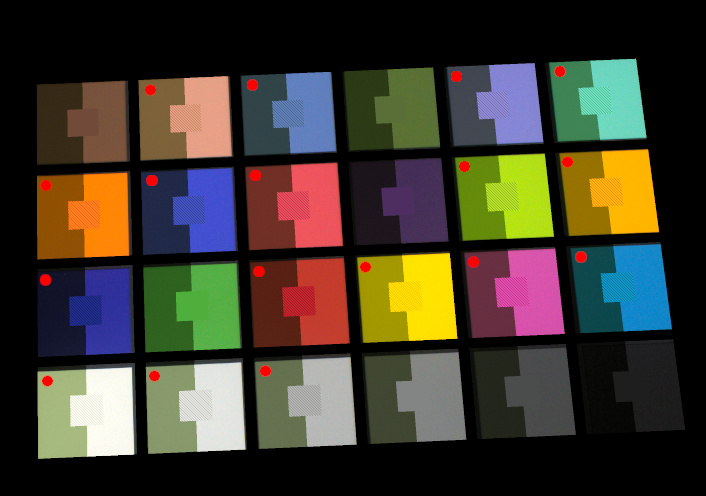

Question 2: If the macbeth measurement method is used, why would you still use the greyscale chart method?

The only reason I can think of is as a sanity check of the average reflectance. The book suggests that a greyscale chart in 10% increments can measure reflectance to within 5%, whereas the macbethcal method is only accurate to about 10%.
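Here is how I read the "within 5%" figure: if you match a sample by eye to the closest patch on a chart with 10% steps, the worst case is a sample halfway between two patches, i.e. an error of half a step. This sketch assumes an idealised chart with patches at exactly 0.1 to 0.9 and reads "5%" as an absolute error of 0.05 in reflectance; `nearest_patch` is a hypothetical helper.

```python
def nearest_patch(reflectance, patches):
    """Return the chart patch value closest to a sample's true reflectance."""
    return min(patches, key=lambda p: abs(p - reflectance))


# Idealised greyscale chart in 10% steps: 0.1, 0.2, ..., 0.9.
patches = [round(0.1 * k, 1) for k in range(1, 10)]

# Sweep true reflectances from 0.100 to 0.900 and record the worst mismatch.
test_values = [i / 1000 for i in range(100, 901)]
worst = max(abs(nearest_patch(r, patches) - r) for r in test_values)
print("worst-case error: %.3f" % worst)  # prints 0.050
```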

Question 3: Where can I get a greyscale chart?

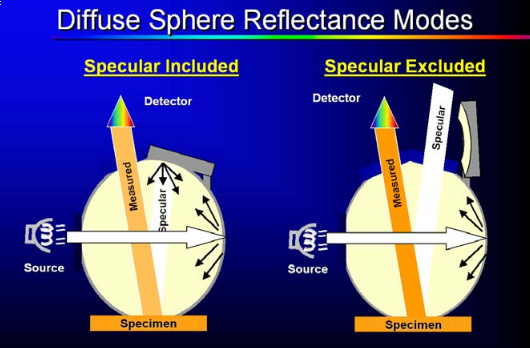

I’ve never seen one before, and the warning in the book suggests that the Munsell chart shows “luminous reflectance”, not, uh, I guess, “hemispherical reflectance”, and so is inappropriate. What is “luminous reflectance”, and how do I know whether the greyscale chart I am looking at shows the right type of reflectance? Also, can somebody link me to a greyscale chart available online? My preliminary searches at local art supply stores (in Sydney, Australia) have come up empty.
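From what I have found so far, luminous reflectance is usually defined as the spectral reflectance weighted by the eye's photopic response, where $\rho(\lambda)$ is the spectral reflectance, $S(\lambda)$ the spectral power distribution of the illuminant, and $V(\lambda)$ the CIE photopic luminous efficiency function:

$$
\rho_v = \frac{\int_{380\,\mathrm{nm}}^{780\,\mathrm{nm}} \rho(\lambda)\, S(\lambda)\, V(\lambda)\, d\lambda}{\int_{380\,\mathrm{nm}}^{780\,\mathrm{nm}} S(\lambda)\, V(\lambda)\, d\lambda}
$$

If that is right, it is a single spectrally weighted number and by itself says nothing about the measurement geometry (hemispherical or directional), which is part of what I'm unsure about.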

It also says I can build my own chart from neutral density samples obtained from a graphic arts ink supplier. Can anybody point me to where I could get these? And how can I be sure they represent a known hemispherical reflectance?

Question 4: How would I apply an image pattern calibrated with macbethcal?

If the image pattern is already calibrated, it should already contain accurate RGB reflectances. So what numbers should I put in the “bar” material below? My current understanding is that colorpict multiplies the material's reflectance values (linearly, I assume?). Should I therefore put 1 1 1 in bar?

```
void colorpict foo
7 red green blue foo.pic . frac(Lu) frac(Lv)
0
0

foo plastic bar
0
0
5 ? ? ? 0 0
```
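To check my own reasoning, here is the arithmetic I believe colorpict does: the pattern value is multiplied channel by channel with the material's RGB. This is a sketch of my understanding, not Radiance's code. The pixel value is hypothetical, and the `(1 - spec)` scaling of plastic's diffuse part is an assumption I haven't verified; with specularity 0, as in `bar` above, it drops out.

```python
def plastic_diffuse(pattern_rgb, material_rgb, spec=0.0):
    """Diffuse RGB reflectance of a patterned plastic, as I understand it.

    Channel-wise product of the colorpict value and the material colour.
    Assumption (unverified): Radiance scales plastic's diffuse part by
    (1 - spec); with spec = 0 this is just the product.
    """
    return tuple(p * m * (1.0 - spec) for p, m in zip(pattern_rgb, material_rgb))


pixel = (0.42, 0.35, 0.28)  # hypothetical calibrated reflectance from foo.pic
print(plastic_diffuse(pixel, (1, 1, 1)))  # prints (0.42, 0.35, 0.28)
print(plastic_diffuse(pixel, (0.5, 0.5, 0.5)))  # every channel halved
```

If this is right, 1 1 1 leaves the calibrated image values unchanged, which is why I suspect that is the answer.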

I hope this all makes sense, and sorry for the long post!