Thanks Greg! I am using rad with QUALITY=HIGH, so I believe it renders at a higher resolution and uses pfilt to scale the image down to control aliasing. Here is the getinfo output:

scene_v1.hdr:

#?RADIANCE

rpiece -F scene_v1_rpsync.txt -PP pfuIzZYk -t 15 -vh 49.13434264120263 -vv 28.84154625502197 -vp 0.5879582166671753 -1.133952021598816 0.34054961800575256 -vd -0.23700669407844543 0.9646517634391785 -0.11521711945533752 -vu -0.027499962598085403 0.1118871420621872 0.9933403730392456 -x 2880 -y 1620 -dp 4096 -ar 127 -ms 0.041 -ds .2 -dt .05 -dc .75 -dr 3 -ss 16 -st .01 -ab 3 -af scene.amb -aa .1 -ad 1536 -as 768 -av 10 10 10 -lr 12 -lw 1e-5 -av 0 0 0 -ds .01 -dj .8 -dt 0 -ps 3 -pt .04 -o scene_v1.unf scene.oct

SOFTWARE= RADIANCE 4.2a lastmod Mon May 11 13:27:51 PDT 2015 by rgugliel on ubuntu

VIEW= -vtv -vp 0.587958 -1.13395 0.34055 -vd -0.237007 0.964652 -0.115217 -vu -0.0275 0.111887 0.99334 -vh 49.1343 -vv 28.8415 -vo 0 -va 0 -vs 0 -vl 0

CAPDATE= 2018:09:19 01:01:52

GMT= 2018:09:18 15:01:52

FORMAT=32-bit_rle_rgbe

pfilt -m .25 -x 960 -y 540 -p 1.000

EXPOSURE=2.117443e+00
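The rpiece line above repeats a few options (-av, -ds and -dt). As far as I understand, rpict and rpiece read their arguments left to right, so the later value is the one in effect. The small Python sketch below is not a Radiance tool. It lists the repeated options and checks the oversampling factor between the rpiece resolution and the pfilt output. The `parse_options` helper and its flag-detection heuristic are hypothetical and written only for this header.

```python
import shlex
from collections import defaultdict

# rpiece and pfilt command lines copied from the scene_v1.hdr header.
RPIECE = (
    "rpiece -F scene_v1_rpsync.txt -PP pfuIzZYk -t 15 "
    "-vh 49.13434264120263 -vv 28.84154625502197 "
    "-vp 0.5879582166671753 -1.133952021598816 0.34054961800575256 "
    "-vd -0.23700669407844543 0.9646517634391785 -0.11521711945533752 "
    "-vu -0.027499962598085403 0.1118871420621872 0.9933403730392456 "
    "-x 2880 -y 1620 -dp 4096 -ar 127 -ms 0.041 -ds .2 -dt .05 -dc .75 "
    "-dr 3 -ss 16 -st .01 -ab 3 -af scene.amb -aa .1 -ad 1536 -as 768 "
    "-av 10 10 10 -lr 12 -lw 1e-5 -av 0 0 0 -ds .01 -dj .8 -dt 0 "
    "-ps 3 -pt .04 -o scene_v1.unf scene.oct"
)
PFILT = "pfilt -m .25 -x 960 -y 540 -p 1.000"


def parse_options(cmd):
    """Group each '-flag' with the non-flag tokens that follow it.

    Hypothetical heuristic: a token is a flag if it starts with '-' followed
    by a letter, so negative numbers such as -1.13 stay as arguments."""
    tokens = shlex.split(cmd)[1:]  # drop the program name
    occurrences = defaultdict(list)
    flag = None
    for tok in tokens:
        if len(tok) > 1 and tok[0] == "-" and tok[1].isalpha():
            flag = tok
            occurrences[flag].append([])  # start a new occurrence
        elif flag is not None:
            occurrences[flag][-1].append(tok)
    return occurrences


rp = parse_options(RPIECE)
pf = parse_options(PFILT)

# Options given more than once; assumed: the last occurrence takes effect.
repeated = {f: [" ".join(a) for a in occ] for f, occ in rp.items() if len(occ) > 1}
for flag, values in sorted(repeated.items()):
    print(f"{flag}: {values} -> effective {values[-1]}")

# Ratio between the rendered resolution and the pfilt output resolution.
fx = int(rp["-x"][-1][0]) / int(pf["-x"][-1][0])
fy = int(rp["-y"][-1][0]) / int(pf["-y"][-1][0])
print(f"downsampling factor: {fx:g} x {fy:g}")
```

Run as-is, it should report -av 0 0 0, -ds .01 and -dt 0 as the effective values, and a reduction by a factor of 3 in each direction. That factor is consistent with the 2880x1620 render being filtered down to 960x540.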

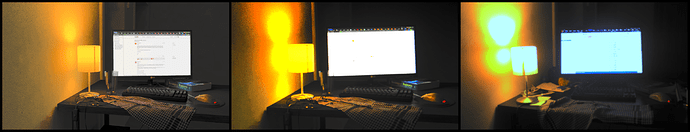

I wanted to share some analysis images today. The first is a photographic comparison of the scene: the first image is the render itself, the second is a photograph of the render, and the third is the real-world photograph. I have converted all images to greyscale so that white balance does not affect the comparison.
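The images above may have been desaturated in an ordinary image editor. If the greyscale conversion is instead done on the HDR data, one approach is a luminance-weighted grey. The Python sketch below assumes this approach; the function names are hypothetical. It decodes a 32-bit RGBE pixel the way Radiance does, undoes the header EXPOSURE to get back to radiance, and applies the usual Radiance luminance weights (0.265, 0.670, 0.065) with the 179 lm/W efficacy factor.

```python
import math

# Cumulative exposure recorded by pfilt in the scene_v1.hdr header.
EXPOSURE = 2.117443


def rgbe_to_rgb(r, g, b, e):
    """Decode one RGBE pixel as Radiance does: (m + 0.5) * 2**(e - 136)."""
    if e == 0:
        return (0.0, 0.0, 0.0)  # exponent 0 encodes black
    f = math.ldexp(1.0, e - 136)
    return ((r + 0.5) * f, (g + 0.5) * f, (b + 0.5) * f)


def luminance(rgb, exposure=EXPOSURE):
    """Photometric luminance in cd/m^2, after dividing out the header EXPOSURE.

    Assumes Radiance's default RGB primaries (weights .265/.670/.065)."""
    r, g, b = (c / exposure for c in rgb)
    return 179.0 * (0.265 * r + 0.670 * g + 0.065 * b)


def to_grey(rgb):
    """Replace a pixel by its luminance-weighted grey, keeping the picture's exposure.

    The weights sum to 1, so a neutral grey pixel is left unchanged."""
    y = 0.265 * rgb[0] + 0.670 * rgb[1] + 0.065 * rgb[2]
    return (y, y, y)
```

For example, `rgbe_to_rgb(128, 128, 128, 129)` decodes to 1.00390625 in each channel. `to_grey` gives a display-referred grey, while `luminance` gives absolute values that are useful for checking the render against a luminance meter.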

The noticeable differences are the scotopic vision, the camera's overexposure of the lamp, the monitor's illumination of the Radiance book, and the specular highlight on the keyboard. Compared with my own eyesight, the scotopic vision and lamp exposure in the Radiance image are much more accurate than in the photo. The keyboard's specular highlight is probably due to my poor guess at a specular value, and the book illumination is probably due to an inaccurate light distribution for the monitor.

For completeness, here is the same comparison in colour (not desaturated).

An interesting psychological effect I have noticed is that even though the Radiance image adjusted for the human eye is closer to what I actually see, I personally think it looks more fake: my brain tells me it is a render and not a real scene. If I take a photo of it instead (such as the middle photo above), it looks more photorealistic despite being less accurate to human vision. I guess I have been trained to expect things like overexposed lamps and underexposed dark rooms, and my brain compensates for them.

Update:

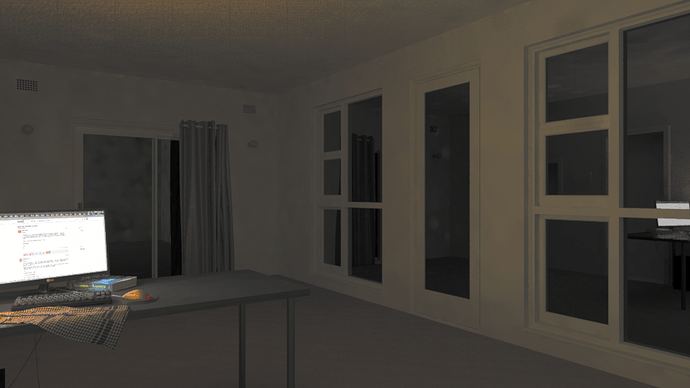

- New camera angle!

- Modelled walls, sliding door, wall sconces, curtain, ventilation grille, windows, and side door

- Measured door frame, door handle, curtain, and sconce globe materials