Dear Community, I am still interested in irradiance measurements using all-sky imager cameras. I would like to know how to correctly transform from the CIE 1931 to the sRGB spectral response. I am looking for a book or some kind of documentation where the math is explained. Any hint or help is highly appreciated.

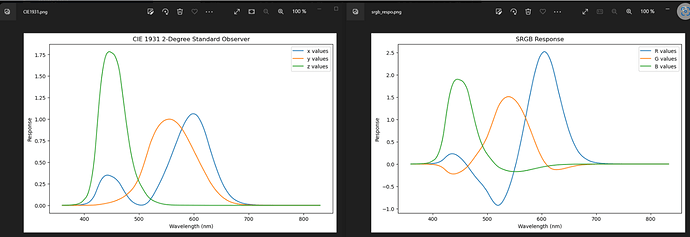

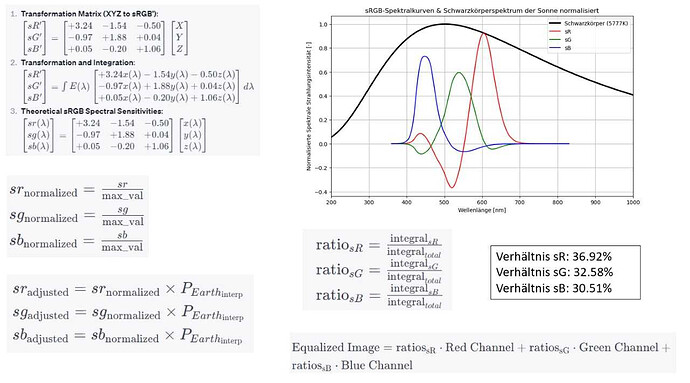

Here's my first try, but I think I made some mistakes (I use Python). In the end, this is what the result should look like.

We are currently thinking about calibrating the sRGB images with a solar spectroradiometer…

Kind regards

Paul

Hi Paul,

since you are doing this in Python, I wonder whether you have come across the python-colormath module. Its website lists the various conversions, among them XYZ to sRGB.

The site also links to some resources, among them http://www.brucelindbloom.com, which explains the math behind the XYZ to sRGB conversion. I did not verify that this is identical to what is implemented in the colormath module, but since the latter is open source, you can check this yourself.
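For orientation, the XYZ to sRGB conversion described on Lindbloom's site comes down to a 3x3 matrix followed by the sRGB transfer curve. The sketch below assumes XYZ relative to a D65 white with Y scaled to 1, and the matrix values are assumed to be the sRGB/D65 ones listed on Lindbloom's RGB/XYZ matrix page (worth checking against that page). The function names are hypothetical; this is not the colormath API.

```python
import numpy as np

# Linear XYZ (D65 white, Y scaled to 1) -> linear sRGB.
# Values assumed to match Lindbloom's sRGB / D65 matrix; verify against his page.
XYZ_TO_SRGB = np.array([
    [ 3.2404542, -1.5371385, -0.4985314],
    [-0.9692660,  1.8760108,  0.0415560],
    [ 0.0556434, -0.2040259,  1.0572252],
])


def xyz_to_linear_srgb(xyz):
    """Map XYZ triplets, shape (..., 3), to linear sRGB.

    Colors outside the sRGB gamut come out below 0 or above 1.
    """
    return np.asarray(xyz, dtype=float) @ XYZ_TO_SRGB.T


def srgb_encode(rgb_linear):
    """Apply the piecewise sRGB transfer curve after clipping to [0, 1]."""
    c = np.clip(np.asarray(rgb_linear, dtype=float), 0.0, 1.0)
    return np.where(c <= 0.0031308, 12.92 * c, 1.055 * c ** (1 / 2.4) - 0.055)


def xyz_to_srgb(xyz):
    """XYZ -> gamma-encoded sRGB in [0, 1] (hypothetical convenience wrapper)."""
    return srgb_encode(xyz_to_linear_srgb(xyz))


if __name__ == "__main__":
    d65_white = [0.95047, 1.0, 1.08883]
    print(xyz_to_linear_srgb(d65_white))  # approximately [1, 1, 1]
```

Note that only the linear values are suitable for radiometric work; the encoded values are meant for display.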

Best, Lars.

If your values are linear, you can use the xyz_srgb.cal file included with Radiance. Here's how you would take XYZ input values and produce RGB in a CCIR-709 primary space (both linear):

`rcalc -f xyz_srgb.cal -e '$1=R($1,$2,$3);$2=G($1,$2,$3);$3=B($1,$2,$3)'`

(Use double-quotes if running on Windows.)

-Greg

Hello Lars,

thanks for these useful resources, they are really great! I tried out the colormath library and got some results. Unfortunately, I can't find a trustworthy source to confirm the results I got with colormath:

Hello Greg, thanks for the instructions, I will try them soon as well. Could you take a look at the plot I just posted and confirm the results?

Kind regards

Your plots correspond to a specific set of primary spectra. There is no unique solution that takes you from RGB or XYZ values to spectra. There are several methods for generating such spectra, but going from 3-dimensional to infinite-dimensional data is an underdetermined problem, so some other criterion (e.g., energy, metamerism) must be optimized. I can point you to research on how to perform such optimizations, but you should first explain your reasons for doing this.
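A minimal sketch of why there is no way back: any spectral change that lies in the null space of the observer functions leaves XYZ untouched, so infinitely many spectra (metamers) share the same XYZ. It assumes an analytic approximation of the CIE 1931 2-degree observer (the multi-lobe Gaussian fit of Wyman, Sloan and Shirley, 2013) instead of the official tabulated data, a 5 nm grid and simple rectangle-rule integration; all names are hypothetical.

```python
import numpy as np


def _lobe(lam, mu, s_lo, s_hi):
    """Piecewise Gaussian: width s_lo below the peak mu, s_hi above it."""
    s = np.where(lam < mu, s_lo, s_hi)
    return np.exp(-0.5 * ((lam - mu) / s) ** 2)


def cie1931_cmfs(lam):
    """Analytic approximation (Wyman, Sloan & Shirley 2013) of x_bar, y_bar, z_bar.

    Returns an array of shape (3, len(lam)); NOT the official CIE tables.
    """
    x = (1.056 * _lobe(lam, 599.8, 37.9, 31.0) + 0.362 * _lobe(lam, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(lam, 568.8, 46.9, 40.5) + 0.286 * _lobe(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(lam, 437.0, 11.8, 36.0) + 0.681 * _lobe(lam, 459.0, 26.0, 13.8)
    return np.vstack([x, y, z])


STEP = 5.0
lam = np.arange(380.0, 780.0 + STEP, STEP)   # 380..780 nm, 81 samples
A = cie1931_cmfs(lam) * STEP                 # rectangle-rule integration weights, (3, 81)


def spectrum_to_xyz(spd):
    """Integrate a sampled spectrum against the (approximate) observer functions."""
    return A @ spd


# Rows 3.. of V^T span the null space of A: 78 spectral directions
# the observer cannot see at all.
_, _, vt = np.linalg.svd(A)
null_basis = vt[3:]

spd1 = np.ones_like(lam)                     # equal-energy spectrum
bump = null_basis[0] / np.abs(null_basis[0]).max()
spd2 = spd1 + 0.5 * bump                     # different, still non-negative spectrum

if __name__ == "__main__":
    print(spectrum_to_xyz(spd1))
    print(spectrum_to_xyz(spd2))             # same XYZ within rounding
```

Picking one spectrum out of this family is exactly where an extra criterion (smoothness, energy, and so on) has to come in.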

Cheers,

-Greg

Hey Greg, I thought there should be a unique solution, since the sRGB primaries are defined and the camera sensor is color calibrated, so it should transform the captured arrays into sRGB images.

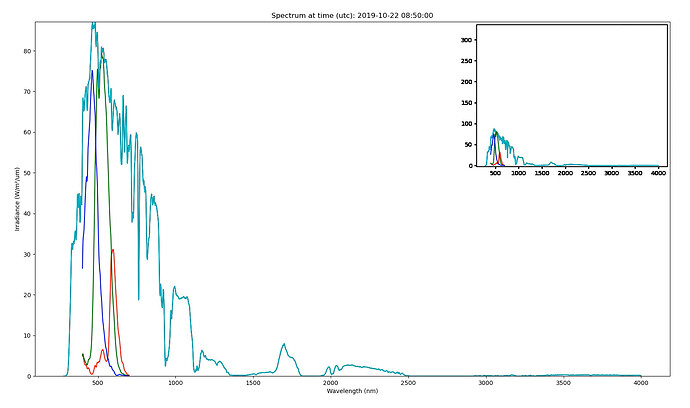

In this screenshot I plotted the sRGB curves relative to the solar spectrum (we are using WISER spectroradiometers 711 & 712). We would integrate over these responses and estimate a broadband irradiance ratio for a better estimate of the solar irradiance, since the silicon camera sensor (Sony IMX477) is spectrally limited to roughly 390-700 nm.
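A minimal sketch of the integration step, assuming the spectroradiometer data has already been merged onto one wavelength grid in nm, and assuming the "broadband ratio" is defined simply as the fraction of measured irradiance that falls inside the sensor's 390-700 nm band (one possible definition among several). The helper names are hypothetical, and the "total" is only as broad as the instruments' measured range.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal integral of y over x (written out to avoid NumPy version differences)."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def band_fraction(wl_nm, irradiance, lo=390.0, hi=700.0):
    """Fraction of the *measured* irradiance that lies between lo and hi (nm).

    Hypothetical helper; the default band is the ~390-700 nm range quoted for the IMX477.
    """
    wl = np.asarray(wl_nm, dtype=float)
    e = np.asarray(irradiance, dtype=float)
    inside = (wl >= lo) & (wl <= hi)
    return trapezoid(e[inside], wl[inside]) / trapezoid(e, wl)


def channel_signal(wl_nm, irradiance, response):
    """Integral of irradiance times one channel's response, all on the same grid."""
    return trapezoid(np.asarray(irradiance, float) * np.asarray(response, float), wl_nm)


if __name__ == "__main__":
    wl = np.arange(300.0, 1101.0, 1.0)   # placeholder grid, not real instrument data
    flat = np.ones_like(wl)              # placeholder spectrum
    print(band_fraction(wl, flat))       # 310 / 800 = 0.3875
    # One possible use (assumption): broadband estimate = in-band estimate / band_fraction
```

With real data, `irradiance` would be the measured solar spectrum and `response` one of the channel curves, resampled onto the same grid.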

Color science is a tricky business, and you should probably spend some time with a textbook or two because there really is a lot to learn. I can recommend Reinhard’s Color Imaging: Fundamentals and Applications as an excellent resource.

To put it in as few words as possible:

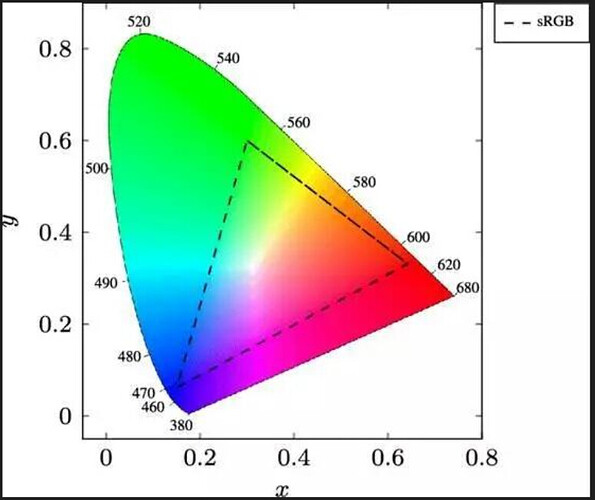

A) RGB primaries are defined in terms of CIE (x,y) chromaticities, and do not have spectral functions associated with them except in specific instances of display monitors, and these differ substantially from one display to another.

B) To get (x,y) chromaticities from display primaries or colors out in the world, you apply the CIE standard observer curves to your color spectra, and these attempt to mimic the average young person's color sensitivity. You cannot then go from (x,y) back to spectra, as a lot of data reduction happened there.

C) There is no such thing as “camera color primaries,” and the mapping from sensor values to sRGB (or any other color space) is another underconstrained problem, so we optimize for an expected or preferred part of the color space where we want the greatest accuracy.
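Point A can be illustrated directly: the linear sRGB to XYZ matrix follows from nothing more than the (x,y) chromaticities of the three primaries and the D65 white point, with no spectra involved. This is the usual construction (Lindbloom's site also describes it); the sRGB/D65 chromaticities below are the standard ones, and the function names are hypothetical. The `xyz_to_xy` helper shows the 3-to-2 data reduction mentioned in point B.

```python
import numpy as np


def xy_to_xyz(x, y):
    """Chromaticity (x, y) -> XYZ with Y fixed to 1."""
    return np.array([x / y, 1.0, (1.0 - x - y) / y])


def xyz_to_xy(xyz):
    """XYZ -> (x, y): three numbers reduced to two, so luminance is discarded."""
    X, Y, Z = xyz
    s = X + Y + Z
    return X / s, Y / s


def rgb_to_xyz_matrix(primaries_xy, white_xy):
    """Linear RGB -> XYZ matrix built only from primary and white chromaticities."""
    P = np.column_stack([xy_to_xyz(x, y) for x, y in primaries_xy])  # columns R, G, B
    W = xy_to_xyz(*white_xy)
    S = np.linalg.solve(P, W)   # per-primary scale so that R = G = B = 1 gives W
    return P * S                # broadcasting scales column j by S[j]


SRGB_PRIMARIES = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]  # R, G, B
D65_XY = (0.3127, 0.3290)

M = rgb_to_xyz_matrix(SRGB_PRIMARIES, D65_XY)   # linear sRGB -> XYZ
M_INV = np.linalg.inv(M)                         # XYZ -> linear sRGB

if __name__ == "__main__":
    np.set_printoptions(precision=4, suppress=True)
    print(M)                           # middle row is roughly [0.2126 0.7152 0.0722]
    print(xyz_to_xy(M @ np.ones(3)))   # back to the D65 chromaticity
```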

Each of the above could occupy a chapter, or in the case of (C) an entire text, to explain fully, so I'm asking you to look into it if you want to know more. It's a fascinating science that sits between physics and human perception of the world, which we still don't fully understand.

Cheers,

-Greg

OK, thanks Greg!

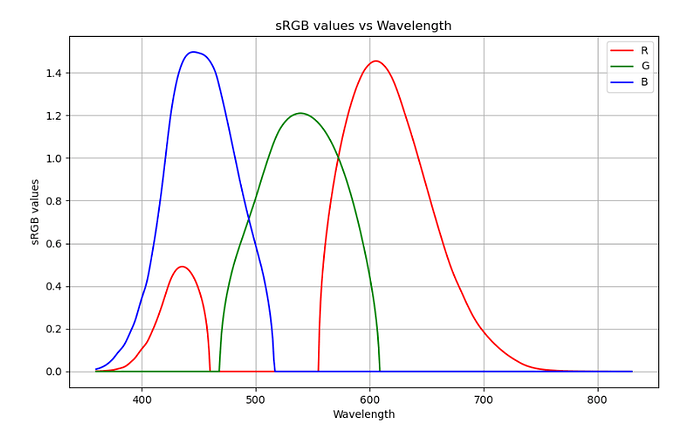

I found the theoretical sRGB spectral curves online here:

https://www.researchgate.net/publication/221209826_Concept_of_Color_Correction_on_Multi-Channel_CMOS_Sensors

The sRGB color space is defined within the XYZ color space, so I thought it would be pretty straightforward to get back to the spectral responses from there…

What exactly is the problem?

It looks like the authors have just taken the x_bar(lambda), y_bar(lambda) and z_bar(lambda) standard observer sensitivity functions and run them through an XYZ->RGB matrix, yielding sensitivity curves with negative lobes that may then be applied to an arbitrary spectrum to give you sRGB (linear) primary quantities. Mathematically, this is exactly the same as the usual process of integrating the x_bar, y_bar, and z_bar observer functions against a measured spectrum and applying the XYZ->RGB matrix afterwards. They just saved you a step.
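The equivalence can be checked numerically: applying the matrix to the observer curves first, or integrating the observer curves and applying the matrix afterwards, gives the same linear sRGB values, and the pre-matrixed curves show negative lobes. This sketch assumes the Wyman, Sloan and Shirley (2013) analytic approximation to the CIE 1931 observer (not the official tables), an XYZ to sRGB matrix assumed to match Lindbloom's D65 values, and a 5 nm rectangle-rule integration; all names are hypothetical.

```python
import numpy as np


def _lobe(lam, mu, s_lo, s_hi):
    """Piecewise Gaussian: width s_lo below the peak mu, s_hi above it."""
    s = np.where(lam < mu, s_lo, s_hi)
    return np.exp(-0.5 * ((lam - mu) / s) ** 2)


def cie1931_cmfs(lam):
    """Approximate CIE 1931 x_bar, y_bar, z_bar (Wyman/Sloan/Shirley fit), shape (3, N)."""
    x = (1.056 * _lobe(lam, 599.8, 37.9, 31.0) + 0.362 * _lobe(lam, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(lam, 568.8, 46.9, 40.5) + 0.286 * _lobe(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(lam, 437.0, 11.8, 36.0) + 0.681 * _lobe(lam, 459.0, 26.0, 13.8)
    return np.vstack([x, y, z])


# Assumed Lindbloom XYZ -> linear sRGB matrix (D65).
XYZ_TO_SRGB = np.array([
    [ 3.2404542, -1.5371385, -0.4985314],
    [-0.9692660,  1.8760108,  0.0415560],
    [ 0.0556434, -0.2040259,  1.0572252],
])

STEP = 5.0
lam = np.arange(380.0, 780.0 + STEP, STEP)
cmfs = cie1931_cmfs(lam)
srgb_curves = XYZ_TO_SRGB @ cmfs   # "sRGB sensitivity" curves, (3, N), with negative lobes


def rgb_two_step(spd):
    """Usual route: integrate x_bar, y_bar, z_bar, then apply the matrix."""
    xyz = (cmfs @ spd) * STEP
    return XYZ_TO_SRGB @ xyz


def rgb_one_step(spd):
    """Shortcut: integrate the pre-matrixed curves directly."""
    return (srgb_curves @ spd) * STEP


if __name__ == "__main__":
    spd = np.random.default_rng(0).uniform(0.0, 1.0, lam.size)  # arbitrary test spectrum
    print(rgb_two_step(spd), rgb_one_step(spd))   # identical up to rounding
    print(srgb_curves.min(axis=1))                # negative entries = negative lobes
```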

This still doesn’t tell you how to take a CMOS sensor with a different set of sensitivity functions that are NOT a simple matrixing of the CIE standard observer and convert that reliably to sRGB. Most sensitivity functions are not linearly related to the CIE curves, at least partly due to the inability of sensors to have negative lobes in their responses.
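One common way to handle a real sensor (shown here as an illustration, not as what any particular camera pipeline does) is to fit a 3x3 matrix by least squares over a chosen set of training spectra. If the sensor curves are an exact linear combination of the CIE curves (the so-called Luther condition), the fit is exact; otherwise a residual error remains, and the choice of training spectra decides where that error lands. The camera curves below are hypothetical Gaussians, NOT measured IMX477 data, the random training spectra are stand-ins, and the CIE curves again use the Wyman, Sloan and Shirley analytic approximation.

```python
import numpy as np


def _lobe(lam, mu, s_lo, s_hi):
    """Piecewise Gaussian: width s_lo below the peak mu, s_hi above it."""
    s = np.where(lam < mu, s_lo, s_hi)
    return np.exp(-0.5 * ((lam - mu) / s) ** 2)


def cie1931_cmfs(lam):
    """Approximate CIE 1931 x_bar, y_bar, z_bar (Wyman/Sloan/Shirley fit), shape (3, N)."""
    x = (1.056 * _lobe(lam, 599.8, 37.9, 31.0) + 0.362 * _lobe(lam, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(lam, 568.8, 46.9, 40.5) + 0.286 * _lobe(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(lam, 437.0, 11.8, 36.0) + 0.681 * _lobe(lam, 459.0, 26.0, 13.8)
    return np.vstack([x, y, z])


def gauss(lam, mu, sigma):
    return np.exp(-0.5 * ((lam - mu) / sigma) ** 2)


STEP = 5.0
lam = np.arange(400.0, 700.0 + STEP, STEP)   # roughly the sensor's usable band
cmfs = cie1931_cmfs(lam)                     # target responses (approximate XYZ)

# HYPOTHETICAL non-negative camera curves; not measured IMX477 data.
camera = np.vstack([gauss(lam, 600.0, 30.0), gauss(lam, 535.0, 35.0), gauss(lam, 460.0, 25.0)])

# A sensor satisfying the Luther condition: an exact (hypothetical) mix of the CIE curves.
luther_sensor = np.array([[0.5, 0.3, 0.1],
                          [0.2, 0.7, 0.1],
                          [0.05, 0.1, 0.9]]) @ cmfs


def fit_ccm(sensor, target, spectra):
    """Least-squares 3x3 matrix M with M @ sensor_response ~= target_response.

    Returns (M, worst error relative to the largest target value) over `spectra`.
    """
    cam = spectra @ sensor.T * STEP                  # (K, 3) sensor responses
    ref = spectra @ target.T * STEP                  # (K, 3) target (XYZ) responses
    m, *_ = np.linalg.lstsq(cam, ref, rcond=None)    # solves cam @ m ~= ref
    err = np.abs(cam @ m - ref).max() / np.abs(ref).max()
    return m.T, err


rng = np.random.default_rng(1)
training = rng.uniform(0.0, 1.0, size=(200, lam.size))   # stand-in training spectra

M_luther, err_luther = fit_ccm(luther_sensor, cmfs, training)
M_camera, err_camera = fit_ccm(camera, cmfs, training)

if __name__ == "__main__":
    print(f"Luther sensor error: {err_luther:.2e}")    # essentially zero
    print(f"Gaussian camera error: {err_camera:.2e}")  # clearly non-zero
```

Replacing `training` with spectra typical of the scenes you care about is one way of optimizing for an "expected or preferred part of the color space" as described above.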

I’m not sure if this helps. Again, there are textbooks out there on this topic, and I am not the world’s expert.

Cheers,

-Greg